Despite massive growth in video traffic over mobile networks (40% CAGR), the experience for mobile viewers remains inconsistent: low video quality, high latency, and rebuffering still stand in the way of smooth streaming. In this blog post we focus on the challenges of delivering live video and on the potential benefits of deploying video delivery functions in a mobile edge computing (MEC) environment, and we consider how initiatives like AWS Wavelength enable new, more engaging solutions for live ABR distribution. To ground our analysis, we describe a recent field experiment Broadpeak performed with AWS Wavelength on the Verizon 5G network in Boston, where our Origin and CDN modules were used to receive, prepare, and deliver live streams captured during an NHL playoff hockey game.

The Status of Live ABR Video Delivery

As OTT consumption continues to grow, leading OTT video service providers like HBO Max, DAZN, Amazon Prime, and Disney are becoming go-to platforms for live streaming. In Europe, this trend seems to be accelerating: DAZN recently acquired the rights to Italy’s Serie A football, and Amazon Prime acquired the rights to France’s Ligue 1.

In many ways, the shift to OTT consumption has opened content up to a broader range of devices: customers tend to stream on their own devices, whereas in the traditional broadcast model video is delivered only over DTT/DTH/IPTV to a set-top box. However, live OTT delivery often suffers from latency issues, with streams arriving up to 45 seconds behind the traditional broadcast.

a) What Latency Are We Measuring?

Latency isn’t a new problem in the video industry, but it is worth defining what we mean by “low latency.” The table below, published by our partner AWS Elemental, shows their view on the relationship between target latency and the associated business cases.

Across the industry, end-to-end latency, also called glass-to-glass latency, is the time it takes for a frame of video to travel from the camera capturing the action all the way to the display. It is what we measure in our experiment.

Glass-to-glass latency is essentially the sum of the latency introduced by the contribution elements (getting the content from the field to the production facility) and the distribution elements (taking the content from the production facility and delivering it to the consumer). While many optimizations can be made on the contribution side (e.g., fine-tuning video compression algorithms and using dedicated video transport protocols such as Zixi), in this post we focus solely on the distribution side, as that’s where Broadpeak’s solutions play a role.

The demarcation line, therefore, is where the stream leaves the live production system and enters distribution. And since playout is reduced to a minimum in the experiment’s setup, our starting point is the moment the OTT content origination (i.e., the origin server) receives the broadcast stream.
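As a rough illustration of that sum, the sketch below models glass-to-glass latency as contribution plus distribution, split at the origin input. The stage names and the values in seconds are hypothetical placeholders, not measurements from our experiment.

```python
from dataclasses import dataclass, field


@dataclass
class LatencyBudget:
    """Glass-to-glass latency split at the origin input (the demarcation line)."""

    # Hypothetical per-stage delays in seconds; stage names are illustrative only.
    contribution: dict[str, float] = field(default_factory=dict)
    distribution: dict[str, float] = field(default_factory=dict)

    def contribution_total(self) -> float:
        return sum(self.contribution.values())

    def distribution_total(self) -> float:
        return sum(self.distribution.values())

    def glass_to_glass(self) -> float:
        # End-to-end latency is the sum of both halves of the chain.
        return self.contribution_total() + self.distribution_total()


budget = LatencyBudget(
    contribution={"capture_and_encode": 1.0, "transport_to_production": 0.5},
    distribution={"packaging": 1.0, "cdn": 0.5, "client_buffer": 6.0},
)
print(f"distribution: {budget.distribution_total():.1f} s "
      f"of {budget.glass_to_glass():.1f} s glass-to-glass")
```

Only the `distribution` entries are in scope for the optimizations discussed in the rest of this post.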

b) How Do We Reduce Latency in Distribution?

There are multiple initiatives across the delivery chain, but the biggest impact comes from addressing client buffering first. If the client can play content as soon as it is received, or with a tiny buffer, latency drops significantly. Non-optimized clients, by contrast, tend to keep several video segments in their buffer.
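To see why buffering dominates, the latency a client adds is roughly the number of media units it holds before playing multiplied by the duration of each unit. A minimal sketch, assuming a client that buffers a fixed number of whole units and ignoring network and decode time; the 3 × 6 s case reflects a common configuration for classic HLS, and the chunk values are illustrative.

```python
def buffer_latency(units_buffered: int, unit_duration_s: float) -> float:
    """Latency (seconds) added by a client that buffers whole units before playing.

    A 'unit' is a full segment for classic HLS/DASH, or a CMAF chunk for
    low-latency clients. Network and decode time are ignored here.
    """
    if units_buffered < 0 or unit_duration_s <= 0:
        raise ValueError("expected a non-negative count and a positive duration")
    return units_buffered * unit_duration_s


# Classic client: three 6-second segments behind the live edge.
segment_based = buffer_latency(3, 6.0)  # 18.0 s
# Low-latency client: three 500 ms CMAF chunks.
chunk_based = buffer_latency(3, 0.5)    # 1.5 s
print(segment_based, chunk_based)
```

Keeping the same number of units but shrinking their duration is exactly the lever that CMAF chunks provide.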

The industry’s response has been CMAF-CTE. The Common Media Application Format (CMAF) specifies a container format and introduces the concept of small chunks (a few hundred milliseconds each) within video segments (several seconds each). CMAF is applicable to both DASH and HLS.

Combined with HTTP chunked transfer encoding (CTE), CMAF chunks can move quickly through the distribution path. The idea is to send each chunk as soon as it is available: every chunk can be published immediately after encoding, which lets the client access the video before the segment is finished and achieves near real-time delivery.
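The sketch below shows the HTTP/1.1 chunked transfer coding itself: each piece of the body is written as a hexadecimal length line followed by its bytes, and a zero-length chunk ends the response, so a server can start sending a segment before it knows the segment’s total size. The byte strings are stand-ins for real CMAF `moof`/`mdat` chunks; this is a framing illustration, not a packager.

```python
from typing import Iterable, Iterator


def encode_chunked(media_chunks: Iterable[bytes]) -> Iterator[bytes]:
    """Frame each media chunk with HTTP/1.1 chunked transfer coding."""
    for chunk in media_chunks:
        if not chunk:
            continue  # a zero-length chunk would prematurely end the body
        yield b"%X\r\n" % len(chunk) + chunk + b"\r\n"
    yield b"0\r\n\r\n"  # last-chunk followed by an empty trailer section


def decode_chunked(body: bytes) -> list[bytes]:
    """Recover chunk payloads from a complete chunked body (no chunk extensions)."""
    chunks, pos = [], 0
    while True:
        line_end = body.index(b"\r\n", pos)
        size = int(body[pos:line_end], 16)
        pos = line_end + 2
        if size == 0:
            return chunks
        chunks.append(body[pos:pos + size])
        pos += size + 2  # skip the data and its trailing CRLF


# Stand-ins for CMAF chunks produced every few hundred milliseconds.
cmaf_chunks = [b"moof+mdat #1", b"moof+mdat #2"]
wire = b"".join(encode_chunked(cmaf_chunks))
assert decode_chunked(wire) == cmaf_chunks
```

Because `encode_chunked` is a generator, each framed chunk can be written to the socket the moment the encoder hands it over. HTTP/2 does not use chunked transfer coding; there, the same incremental delivery happens through its own DATA frames.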

The result at the output of the content delivery network (CDN) is faster video transfer with more consistent bandwidth usage, instead of bursts of activity and inactivity on the connection. Today this requires an origin packager capable of creating small CMAF chunks, a CDN supporting CTE, and video players supporting both CMAF and CTE. In our experiment, CMAF-CTE support came from the Broadpeak BkS350 Origin Packager and the BkS400 CDN cache.

c) With CMAF-CTE Being the Answer, Is the Latency Problem Entirely Solved?

Since network bandwidth is not guaranteed in OTT delivery, the video player deliberately delays playback to build a buffer it can draw on if bandwidth were to drop during the live session. The more unpredictable the delivery, the larger the client buffer must be, and the higher the latency.

So, while low-latency technology is available, it hasn’t yet been deployed at scale for broadcast events because it still carries some risk. With very little content in the client buffer, a bigger problem can arise: an on-screen freeze followed by a rebuffering event.

To be clear, this is significantly worse than a few seconds of latency. First, playback is interrupted, and we know that this can lead to stream abandonment and sometimes even customer churn. Second, a freeze of a few seconds adds those seconds to the latency of the video session. Being too aggressive on latency can therefore backfire, leaving the user with a far worse experience.
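A toy simulation makes this trade-off concrete. It assumes a fixed bitrate, a player that never speeds up to catch back up to live, and one-second time steps; the throughput trace (steady delivery, a three-second collapse, then recovery) is hypothetical.

```python
from typing import NamedTuple, Sequence


class SessionResult(NamedTuple):
    stall_s: float          # total time the screen was frozen
    final_latency_s: float  # distance behind the live edge at the end


def simulate_live_session(
    initial_buffer_s: float,
    throughput_ratios: Sequence[float],
    dt_s: float = 1.0,
) -> SessionResult:
    """Toy live-playback model: fixed bitrate, no catch-up, one step per dt_s.

    throughput_ratios[i] is available throughput divided by the video bitrate
    during step i (1.0 = downloading exactly as fast as playback).
    """
    buffer_s = initial_buffer_s
    latency_s = initial_buffer_s  # the player starts this far behind live
    stall_s = 0.0
    for ratio in throughput_ratios:
        # The live edge moves forward by dt_s; media beyond it doesn't exist yet.
        available_s = latency_s - buffer_s + dt_s
        buffer_s += min(ratio * dt_s, available_s)
        if buffer_s >= dt_s:
            buffer_s -= dt_s          # normal playback for the whole step
        else:
            frozen = dt_s - buffer_s  # buffer ran dry: on-screen freeze
            buffer_s = 0.0
            stall_s += frozen
            latency_s += frozen       # every frozen second becomes extra latency
    return SessionResult(stall_s, latency_s)


trace = [1.0, 1.0, 0.0, 0.0, 0.0, 1.5, 1.5, 1.5]
print(simulate_live_session(1.0, trace))  # aggressive 1 s buffer
print(simulate_live_session(4.0, trace))  # conservative 4 s buffer
```

With this trace, the 1-second buffer freezes for 2 seconds and drifts from 1 to 3 seconds behind live, while the 4-second buffer rides out the dip without a freeze. Where the right balance lies depends on how unpredictable the network is.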

In the end, low latency in production is about finding the right buffering balance.

How Does MEC Help Live ABR Delivery?

a) Low-Latency Video Requires a High-Quality Network

When trialing CMAF-CTE technology with our customers, it became clear that low latency places more demands on network quality. Over a perfect network (for example, in a customer lab), we can be very aggressive with the client buffer size, and hence with latency. But lab results for low-latency testing are usually meaningless, as they do not account for the network problems of real-life deployments. In reality, the client needs some buffer to make up for network imperfections and avoid on-screen freezes. That leads to an interesting question: how can we improve the quality of the network? Is there a way to make it nearly perfect?

b) How Do We Make the Network Perfect?

When striving for low latency, player buffers are set to very low levels, so any hiccup in delivery affects the user experience. Shortening the path between content and consumer is therefore key, and this is what CDNs have done for many years by caching content close to consumers. CDNs have always been distributed applications, deployed primarily to reduce network costs and improve consumers’ QoE.

If we push the CDN concept further toward consumers, we end up with new CDN caches deployed at the edge of the network. Multiplying CDN locations close to consumers means content doesn’t have to travel very far at all. This dramatically reduces the risk of network problems and makes video delivery more deterministic. With more reliable delivery, it becomes possible to reduce the size of the buffer inside the video client and to deliver low-latency video services. However, introducing new edge locations for the CDN raises the classic CDN question: where should caches be placed on the network to reduce costs and increase QoE?

c) Why Should We Use the Cloud?

The drawback of distributing CDN caches deeper in the network is the cost of the infrastructure: it requires hundreds of CDN points of presence (PoPs), compared with the tens of PoPs needed today. Because of this cost, we believe that mobile edge caching is best suited to premium live streams, where it is needed to meet expectations for low-latency delivery and to avoid rebuffering events.

Virtualizing and containerizing the CDN application allows operators to deploy the CDN dynamically across the network. This is precisely where cloud technologies help deliver services with greater speed and efficiency: CDN delivery capacity can be provisioned temporarily, where and when it is needed. At Broadpeak we believe that cloudification, the transformation of our solutions to a cloud-native approach, is a key aspect of the network transformations brought by the introduction of 5G.

Today the Broadpeak portfolio can be deployed in many virtualized and containerized environments. Coupled with future orchestration capabilities, the solution will eventually be able to auto-scale based on customer demand.
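Demand-based scaling ultimately reduces to a capacity calculation. A minimal sketch, assuming each cache instance sustains a fixed egress and that the orchestrator targets less than 100% utilization to absorb spikes; `per_instance_mbps` and `target_utilization` are hypothetical parameters, not BkS400 specifications.

```python
import math


def caches_needed(
    concurrent_viewers: int,
    avg_bitrate_mbps: float,
    per_instance_mbps: float,
    target_utilization: float = 0.8,
) -> int:
    """Number of edge cache instances needed to serve the current audience."""
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    demand_mbps = concurrent_viewers * avg_bitrate_mbps
    usable_mbps = per_instance_mbps * target_utilization
    # Assumption: keep at least one instance so the first viewer is served locally.
    return max(1, math.ceil(demand_mbps / usable_mbps))


# Hypothetical fan zone: 10,000 viewers at 5 Mbps on instances rated 20 Gbps.
print(caches_needed(10_000, 5.0, 20_000))
```

An orchestrator would re-evaluate this as the audience grows and shrinks during an event, adding or removing instances at the edge.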

d) What Is AWS Wavelength?

AWS Wavelength is an AWS infrastructure offering, optimized for MEC applications. Wavelength Zones are AWS infrastructure deployments that embed AWS compute and storage services within communications service providers’ (CSP) datacenters at the edge of the 5G network, allowing application traffic from 5G devices to reach application servers running in Wavelength Zones, without leaving the telecommunications network. This avoids the latency that would result from application traffic having to traverse multiple hops, transit network segments, and peering points across the Internet to reach their destination, thus enabling customers to take full advantage of the latency and bandwidth benefits offered by modern 5G networks.
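For teams that want to experiment, Wavelength Zones appear in the EC2 API as zones of type `wavelength-zone`, and an account must opt in to a zone group before launching resources there. The sketch below is an illustration under assumptions, not a deployment guide: it keeps the filtering logic separate so it can be tested offline, and it assumes `boto3` is installed and AWS credentials are configured when `list_wavelength_zones` is actually called.

```python
from typing import Any


def wavelength_zones(response: dict[str, Any], opted_in_only: bool = True) -> list[str]:
    """Extract Wavelength Zone names from an EC2 DescribeAvailabilityZones response."""
    names = []
    for zone in response.get("AvailabilityZones", []):
        if zone.get("ZoneType") != "wavelength-zone":
            continue
        if opted_in_only and zone.get("OptInStatus") != "opted-in":
            continue
        names.append(zone["ZoneName"])
    return names


def list_wavelength_zones(region: str) -> list[str]:
    """Query EC2 for Wavelength Zones in a region (needs boto3 and credentials)."""
    import boto3  # imported lazily so the parsing logic works without boto3

    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.describe_availability_zones(
        AllAvailabilityZones=True,  # include zones the account has not opted in to
        Filters=[{"Name": "zone-type", "Values": ["wavelength-zone"]}],
    )
    return wavelength_zones(response, opted_in_only=False)
```

Launching a cache into a zone found this way additionally requires a subnet in that Wavelength Zone and a carrier gateway (the EC2 `CreateCarrierGateway` operation) so that traffic from 5G devices can reach it.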

AWS Wavelength Zones are available in ten cities across the U.S. with Verizon; in Tokyo and Osaka, Japan with KDDI; in Daejeon, South Korea with SKT; and in London, UK with Vodafone.

A Field Experiment With AWS Wavelength and the NHL

a) Experiment Setup

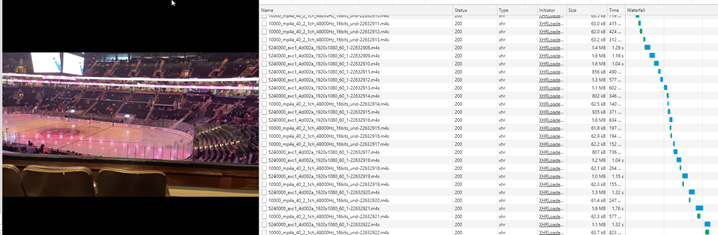

The concept of the experiment is to distribute content captured inside the ice rink during the NHL game to consumers near the venue, who may be gathered in a fan zone, for example. The idea is to use AWS Wavelength as a “local” datacenter that receives the content over 5G and quickly turns it around as a low-latency stream for fans whose 5G devices connect to this AWS Wavelength Zone. To do so, Broadpeak deployed its BkS350 Origin Packager and a small-scale BkS400 CDN instance inside the AWS Wavelength Zone in Boston.

A live video feed is first captured by a 5G smartphone and sent over 5G, using the Zixi protocol, to the AWS Wavelength Zone for transcoding. This transcoded feed is the source ingested by the Origin Packager, which then creates two live output streams: a low-latency stream in the low-latency HLS (LL HLS) format (using CMAF) and a regular (non-low-latency) HLS stream.

b) The Low-Latency Output Using LL HLS

We chose to use LL HLS as the delivery format for our experiment so that streams could easily be played back using the native iOS video player (AVPlayer). For this experiment, we deployed the Broadpeak Origin Server capable of creating LL HLS streams.

LL HLS is an extension to the HLS protocol, developed to deliver the same simplicity, scalability, and quality as HLS while reducing latency to less than two seconds. LL HLS introduces further optimizations beyond CMAF. For example, information about CMAF chunks (about 500 ms of video each, called parts in LL HLS) is published in the manifest before the segment is complete. This allows delivery to start before the segment is fully packaged, saving 1.5 to 5.5 seconds depending on the segment duration. To reduce latency further, the LL HLS client sends its request for the next chunk in anticipation, rather than waiting until the chunk is known to be available. Because the requested chunk or manifest may not exist yet when the server receives the request, the server holds the request until it does, and then sends the video data immediately.
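The sketch below illustrates both mechanisms under simplifying assumptions. `ll_hls_playlist` renders a minimal media playlist in which parts of an in-progress segment are listed with `EXT-X-PART` and the next part is announced with `EXT-X-PRELOAD-HINT`. `BlockingPlaylistServer` models blocking playlist reload, where the player requests a future part via the `_HLS_msn` and `_HLS_part` query parameters and the server holds the response until that part exists. File names, the 4-second segment, and the class itself are hypothetical, and a real origin would wire these into an HTTP server. `early_delivery_saving` encodes our reading of the savings quoted above: segment duration minus one 500 ms part, which gives 1.5 s for 2-second segments and 5.5 s for 6-second segments.

```python
import asyncio

PART_TARGET_S = 0.5  # assumed part duration, matching the ~500 ms chunks above


def ll_hls_playlist(msn: int, segment_s: float, parts_done: int) -> str:
    """Minimal LL HLS media playlist while segment `msn` is being packaged."""
    first = max(msn - 1, 0)
    lines = [
        "#EXTM3U",
        f"#EXT-X-TARGETDURATION:{round(segment_s)}",
        f"#EXT-X-PART-INF:PART-TARGET={PART_TARGET_S}",
        # Blocking reloads supported; players start >= 3 part targets from live.
        f"#EXT-X-SERVER-CONTROL:CAN-BLOCK-RELOAD=YES,PART-HOLD-BACK={3 * PART_TARGET_S}",
        f"#EXT-X-MEDIA-SEQUENCE:{first}",
        '#EXT-X-MAP:URI="init.mp4"',
    ]
    if msn > 0:  # keep only the most recent complete segment, for brevity
        lines += [f"#EXTINF:{segment_s},", f"seg{msn - 1}.m4s"]
    # Parts of the in-progress segment are listed before the segment is complete.
    for p in range(parts_done):
        lines.append(f'#EXT-X-PART:DURATION={PART_TARGET_S},URI="seg{msn}.part{p}.m4s"')
    # Announce the next part so the player can request it before it exists.
    lines.append(f'#EXT-X-PRELOAD-HINT:TYPE=PART,URI="seg{msn}.part{parts_done}.m4s"')
    return "\n".join(lines) + "\n"


def early_delivery_saving(segment_s: float, part_s: float = PART_TARGET_S) -> float:
    """Seconds gained by shipping the first part instead of the whole segment."""
    return segment_s - part_s


class BlockingPlaylistServer:
    """Holds playlist requests for (_HLS_msn, _HLS_part) until that part exists."""

    def __init__(self, segment_s: float = 4.0) -> None:
        self.segment_s = segment_s
        self.msn, self.parts_done = 0, 0
        self._changed = asyncio.Condition()

    async def publish_part(self) -> None:
        """Called by the packager each time it finishes writing a part."""
        async with self._changed:
            self.parts_done += 1
            if self.parts_done * PART_TARGET_S >= self.segment_s:
                self.msn, self.parts_done = self.msn + 1, 0  # segment complete
            self._changed.notify_all()  # wake every held request to re-check

    async def get_playlist(self, hls_msn: int, hls_part: int) -> str:
        """Respond only once part `hls_part` of segment `hls_msn` is available."""
        async with self._changed:
            await self._changed.wait_for(
                lambda: (self.msn, self.parts_done) > (hls_msn, hls_part)
            )
            return ll_hls_playlist(self.msn, self.segment_s, self.parts_done)


async def demo() -> str:
    server = BlockingPlaylistServer(segment_s=4.0)
    # The player asks for part 2 of segment 0 before the packager has written it.
    held = asyncio.create_task(server.get_playlist(hls_msn=0, hls_part=2))
    for _ in range(3):
        await asyncio.sleep(0)       # let the held request run and block
        await server.publish_part()  # packager writes parts 0, 1, then 2
    return await held


print(asyncio.run(demo()))
```

The held request returns as soon as part 2 is published, and the playlist it receives already hints at part 3, so the player can immediately issue its next anticipatory request.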

LL HLS was standardized in April 2020 and has been adopted by the industry since the release of iOS 14 and tvOS 14 in September 2020.

c) Delivering Low-Latency Video to Mobile Clients Using an MEC Instance of CDN

One of the major value propositions of MEC is reduced network latency: the time it takes for data to travel physically from the CDN cache to the end consumer. At the network level, latency is measured in milliseconds, so it doesn’t directly compare with video-level latency, which is measured in seconds or tens of seconds.
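To put the two scales side by side, here is a back-of-the-envelope sketch. It assumes light travels through optical fiber at roughly 200,000 km/s (about 200 km per millisecond) and ignores routing, queuing, and radio-access delay; the distances are hypothetical.

```python
FIBER_KM_PER_MS = 200.0  # approx. speed of light in glass, about 2/3 of c


def propagation_rtt_ms(path_km: float) -> float:
    """Round-trip propagation delay over a fiber path, ignoring queuing and processing."""
    return 2 * path_km / FIBER_KM_PER_MS


for label, km in [("metro edge", 50), ("regional PoP", 500), ("cross-country", 4000)]:
    print(f"{label:>13}: {propagation_rtt_ms(km):5.1f} ms RTT")
# Even a 40 ms round trip is small next to a player buffer measured in seconds.
```

Raw propagation delay is not what stalls a video player; as the next paragraph explains, the benefit of a short path lies in how much less network it exposes to congestion.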

But there is a clear correlation between the quality of the connection (and its potential throughput) and the distance the packets must travel. While a few milliseconds don’t meaningfully delay video delivery, using only a short section of the network provides a big advantage: that section is very unlikely to be congested, and is therefore less risky for delivering a low-latency stream. Deploying the CDN cache inside AWS Wavelength effectively secures low-latency video delivery.

Broadpeak leverages the AWS Wavelength Zone to serve all requests from end users located in the 5G coverage area. The Broadpeak CDN cache (the BkS400 installed in the AWS Wavelength Zone) delivers content using the LL HLS behavior described earlier.

Note that Apple requires HTTP/2 for delivering low-latency streams to AVPlayer. The BkS400 therefore uses HTTP/2 for transport, moving away from HTTP/1.1, which was standardized more than 20 years ago.

Now that multi-CDN delivery and CDN selection are the norm, bringing these innovations to the on-net CDN is very important for consumer QoE.

d) Delivering Video to Mobile Clients Using a Standard CDN

In parallel to the low-latency path inside the AWS Wavelength Zone, the origin server also offers the streams to third-party CDNs for broader coverage. The non-low-latency version of the stream (standard HLS) is used for this path, because streaming conditions outside the edge are unlikely to be optimal and a larger client buffer is safer there.
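The two delivery paths amount to a routing decision. A minimal sketch, assuming the request router already knows whether a client sits in the 5G coverage area served by the Wavelength Zone and whether its player supports LL HLS; the hostnames and playlist paths are hypothetical, and real CDN selection would also weigh load, health, and cost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Client:
    in_wavelength_coverage: bool  # e.g. inferred from the network the request arrives on
    supports_ll_hls: bool         # e.g. a native AVPlayer on iOS/tvOS 14 or later


# Hypothetical endpoints for illustration only.
EDGE_LL_HLS = "https://edge.example.net/live/ll/index.m3u8"
THIRD_PARTY_HLS = "https://cdn.example.com/live/std/index.m3u8"


def select_stream(client: Client) -> str:
    """Send capable 5G clients in coverage to the edge LL HLS stream, others to standard HLS."""
    if client.in_wavelength_coverage and client.supports_ll_hls:
        return EDGE_LL_HLS
    # Outside the edge, delivery is less predictable, so keep the larger-buffer stream.
    return THIRD_PARTY_HLS


print(select_stream(Client(in_wavelength_coverage=True, supports_ll_hls=True)))
print(select_stream(Client(in_wavelength_coverage=False, supports_ll_hls=True)))
```

Falling back to standard HLS by default means a misclassified client gets a slightly later but safer stream, rather than a low-latency stream over a path that cannot sustain it.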

Where Is the Value and Who Would Use Broadpeak on AWS Wavelength?

a) What Does it Mean for the Future?

We know that video service providers are considering new ways to alleviate bandwidth problems with MEC, where CDN caches are deployed very close to consumers, resulting in an improved video experience. Initiatives like AWS Wavelength are the first opportunities to deploy CDN caching in MEC, and they are proving the theory right.

With caching embedded in the mobile edge infrastructure, video service providers can deliver more throughput to consumers and virtually eliminate network congestion. Going forward, this enables premium live ABR experiences at scale with almost no latency, and opens the door to more bandwidth-hungry video applications like VR, 4K, and 8K.

b) Video Content Providers Are the First Beneficiaries of the Solution

Clearly OTT video content providers, who today face challenges delivering live ABR events at scale over mobile networks, would greatly benefit from the solution proposed here.

First, their consumers get an outstanding video experience with nearly guaranteed delivery: content is streamed from nearby locations over a shorter connection that offers higher throughput and puts an end to congestion. Consumers get quicker access to content, higher average bit rates, fewer rebuffering events, and improved low-latency performance.

Second, content providers have full visibility into their delivery, because the cache instances are private local caches that they control. This gives them access to all the monitoring and analytics features required for deep insight into their streaming services.

Third, this private CDN is deployed and operated inside an AWS environment that content providers are most likely very familiar with. The unified AWS self-service portal and products hide the complexity of the multiple operator networks, which is essential for content providers targeting a global reach.

For more information, you can visit the Broadpeak partner booth on the AWS Virtual Village online during Mobile World Congress 2021 from 28th of June to 1st of July here: https://village.awsevents.com.

With AWS Wavelength expanding globally into several 5G networks, now is the time to trial the technology. Contact us today to deploy this technology for your mobile consumers, and give your content the edge!